French to English Translator

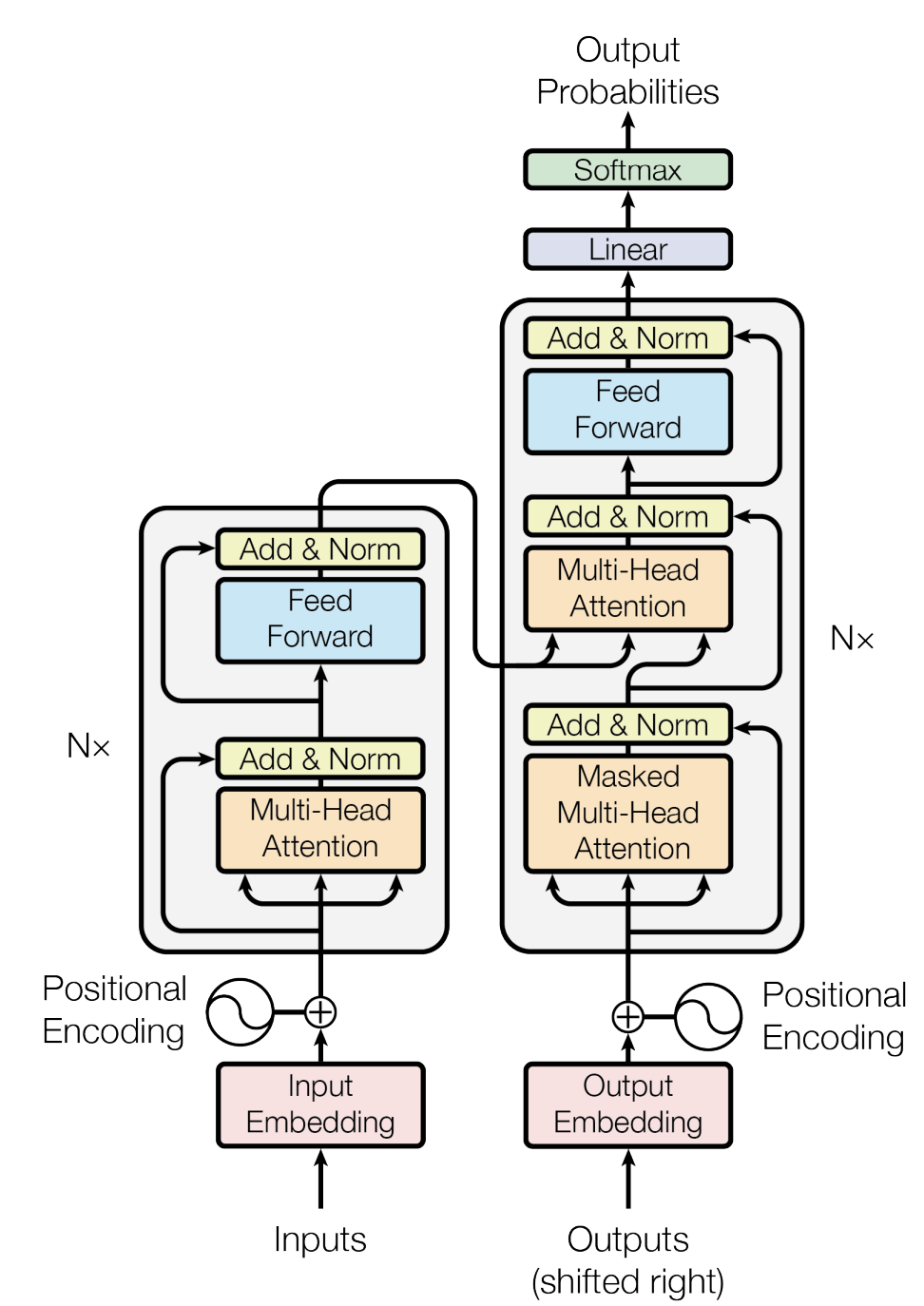

In my Natural Language Computing course (CSC401) at UofT, I trained a French-to-English deep learning model on the Canadian Hansards dataset, following the standard Transformer architecture from the "Attention Is All You Need" paper. The architecture consists of encoder blocks with multi-head self-attention and feedforward layers, and decoder blocks with masked self-attention, cross-attention over the encoder outputs, and feedforward layers. Standard sinusoidal positional encodings were used, and both pre-layer-normalization and post-layer-normalization variants of the blocks were trained and evaluated. Below is a summary of the model architecture.
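As a small aside on the sinusoidal positional encodings mentioned above, here is a minimal sketch of how such a table can be built, using the formulation from the "Attention Is All You Need" paper. It is written in plain Python for readability and is not the course implementation; the function name `sinusoidal_positional_encoding` and its parameters are assumptions made for illustration.

```python
import math


def sinusoidal_positional_encoding(seq_len, d_model):
    """Return a seq_len x d_model table of sinusoidal position encodings.

    Even columns hold sin(pos / 10000^(i / d_model)) and the following odd
    column holds the cosine of the same angle, where i is the even column index.
    """
    if d_model % 2 != 0:
        raise ValueError("d_model must be even in this sketch")
    table = []
    for pos in range(seq_len):
        row = []
        for i in range(0, d_model, 2):
            angle = pos / (10000 ** (i / d_model))
            row.append(math.sin(angle))  # column i
            row.append(math.cos(angle))  # column i + 1
        table.append(row)
    return table


# Hypothetical usage: encodings for 4 positions in an 8-dimensional model.
pe = sinusoidal_positional_encoding(seq_len=4, d_model=8)
```

Each row would be added to the token embedding at the same position, so the model receives order information without any learned position parameters.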

Both greedy and beam search decoding were implemented to translate a source sequence into a target sequence with the trained model. Finally, to evaluate translation quality, BLEU scores were computed between the model's translations and reference translations.
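The difference between the two decoding strategies can be illustrated with a minimal sketch. It assumes a hypothetical `step_fn` that maps a partial target sequence to next-token log-probabilities; in the real system this role would be played by the trained Transformer decoder. The toy distribution, token names, and function names below are invented for illustration, and the beam search omits refinements such as length normalization.

```python
import math


def greedy_decode(step_fn, sos, eos, max_len):
    """Append the single most probable next token at every step."""
    seq = [sos]
    for _ in range(max_len):
        log_probs = step_fn(seq)  # dict: token -> log-probability
        token = max(log_probs, key=log_probs.get)
        seq.append(token)
        if token == eos:
            break
    return seq


def beam_search_decode(step_fn, sos, eos, max_len, beam_width=2):
    """Keep the beam_width best partial hypotheses by cumulative log-prob."""
    beams = [(0.0, [sos])]  # (cumulative log-probability, tokens)
    for _ in range(max_len):
        candidates = []
        for score, seq in beams:
            if seq[-1] == eos:
                candidates.append((score, seq))  # finished: carry over as-is
                continue
            for token, lp in step_fn(seq).items():
                candidates.append((score + lp, seq + [token]))
        beams = sorted(candidates, key=lambda c: c[0], reverse=True)[:beam_width]
        if all(seq[-1] == eos for _, seq in beams):
            break
    return beams[0][1]


# Toy stand-in for the trained model (hypothetical probabilities).
TOY_PROBS = {
    ("<s>",): {"the": 0.6, "a": 0.4},
    ("<s>", "the"): {"cat": 0.55, "dog": 0.45},
    ("<s>", "a"): {"cat": 0.95, "dog": 0.05},
}


def toy_step(seq):
    """Return next-token log-probs; unknown prefixes always end the sentence."""
    probs = TOY_PROBS.get(tuple(seq), {"</s>": 1.0})
    return {tok: math.log(p) for tok, p in probs.items()}


greedy_result = greedy_decode(toy_step, "<s>", "</s>", max_len=10)
beam_result = beam_search_decode(toy_step, "<s>", "</s>", max_len=10, beam_width=2)
```

In this toy setup greedy decoding commits to "the" because it is the most likely first token, ending with a sequence of probability 0.6 × 0.55 = 0.33, while beam search keeps "a" alive and finds the sequence with probability 0.4 × 0.95 = 0.38. That is the usual motivation for beam search: a locally best choice is not always part of the globally best sequence.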